When you see a picture, you can easily describe it because you recognize and categorize its contents. Another person looking at the same picture may describe it a little differently, but the two descriptions would be similar.

Now imagine a computer doing the same. Actually, you don’t need to imagine it: the search giant Google has come up with new artificial intelligence software that can not only recognize the scene in a photograph but also describe it.

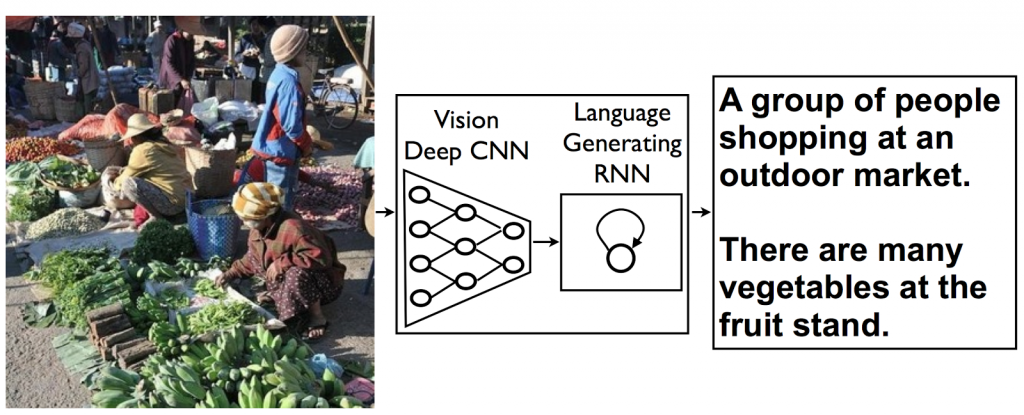

The idea for this new system comes from advances in machine translation between languages, where an RNN (Recurrent Neural Network) transforms a sentence into a vector representation and a second RNN uses this vector representation to generate the sentence in another language.

So the researchers have replaced the first RNN and its input words with a deep Convolutional Neural Network (CNN) trained to classify objects in images, and then fed the CNN’s output into an RNN designed to produce phrases.
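To make the data flow concrete, here is a minimal sketch of the encoder-decoder idea in plain Python. It is not Google's implementation: the "CNN" is replaced by a single linear layer, the weights are random and untrained, and the vocabulary, layer sizes, and function names (`encode_image`, `decode_caption`) are all made up for illustration. Seeding the RNN's hidden state with the image vector is also just one assumed way to connect the two parts.

```python
import math
import random

# Hypothetical toy vocabulary; a real system learns thousands of words.
VOCAB = ["<start>", "<end>", "a", "dog", "on", "grass"]


def rand_matrix(rows, cols, rng):
    """Random weights standing in for trained parameters."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def encode_image(pixels, w_enc):
    """Stand-in for the CNN: map raw pixels to a fixed-length feature vector."""
    return [math.tanh(v) for v in matvec(w_enc, pixels)]


def decode_caption(features, params, max_len=8):
    """Simple RNN decoder: emit one word per step until <end> or max_len."""
    w_hh, w_xh, w_hy, embed = params
    h = list(features)  # assumption: the image vector seeds the hidden state
    token = VOCAB.index("<start>")
    words = []
    for _ in range(max_len):
        x = embed[token]  # embedding of the previously emitted word
        pre = [a + b for a, b in zip(matvec(w_hh, h), matvec(w_xh, x))]
        h = [math.tanh(p) for p in pre]
        scores = matvec(w_hy, h)  # one score per vocabulary word
        token = max(range(len(VOCAB)), key=scores.__getitem__)  # greedy pick
        if VOCAB[token] == "<end>":
            break
        words.append(VOCAB[token])
    return words


rng = random.Random(0)
HIDDEN, EMBED, PIXELS = 8, 4, 16
w_enc = rand_matrix(HIDDEN, PIXELS, rng)
params = (
    rand_matrix(HIDDEN, HIDDEN, rng),      # hidden-to-hidden weights
    rand_matrix(HIDDEN, EMBED, rng),       # input-to-hidden weights
    rand_matrix(len(VOCAB), HIDDEN, rng),  # hidden-to-vocabulary weights
    rand_matrix(len(VOCAB), EMBED, rng),   # word embeddings
)
image = [rng.random() for _ in range(PIXELS)]  # fake 16-pixel "image"
features = encode_image(image, w_enc)
caption = decode_caption(features, params)
print(" ".join(caption))
```

Because the weights are random, the printed words are meaningless. The point is only the shape of the pipeline: an image goes in, a fixed-length vector comes out of the encoder, and the decoder turns that vector into a sequence of words one step at a time.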

The system has been tested on several openly published datasets, including Pascal, Flickr8k, Flickr30k and SBU. It isn’t perfect yet, but the results are very reasonable.

This kind of system could eventually help visually impaired people understand pictures, provide alternate text for images in parts of the world where mobile connections are slow, and make it easier for everyone to search on Google for images.